1. The Setup

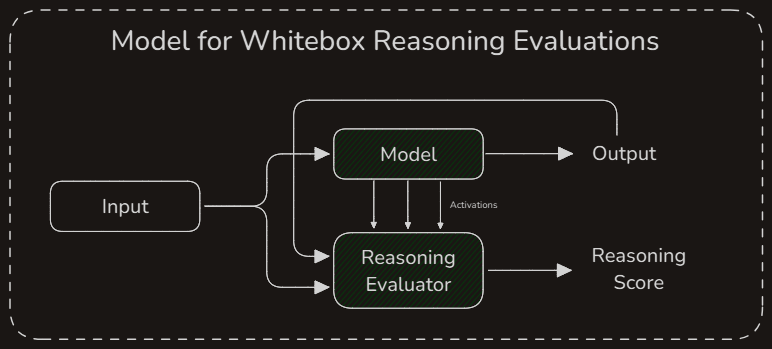

In this issue, we’ll finally discuss the first kind of reasoning evaluation for language models — whitebox evaluation. We’re essentially pitching a framework for checking model sanity.

Of course, this raises the question: what’s a whitebox? As the name implies, and in stark contrast to its blackbox cousin, a whitebox is ‘fully exposed’. In our context, this means you can check under the hood of the model you’re using. Activations, weights: nothing is hidden from you. In other words, if you’re running an AI-based application with your own in-house models, this issue’s for you.

A high level overview of our reasoning evaluation framework. By focusing on numerical results, it’s easier to automate.

The method we’re discussing is what’s known as mechanistic evaluation. The idea is that you look at patterns inside the model, figure out which ones have to do with its reasoning capabilities, and then observe them when the model is at work.

At the end of the process, we expect a numerical score describing reasoning. This makes it easier to automate reasoning assessment and sanity checking. Of course, there ought to be oversight depending on the sensitivity of the context.

The Antedote

Subscribe for regular doses on AI agents & LLMs.

2. Identification

Before you get to assessing reasoning, it’s important to know what exactly you need reasoning for. Not all problems require reasoning.

To keep things simple, we’re going to set a consistent definition for reasoning. By reasoning, we mean an answer that can be broken down into sequential, sound steps. In essence, we expect responses to answer both “what” and “why”.

As a basic example, let’s consider a school-level algebra problem.

A school-level algebra problem.

It’s not quite enough to know that the answer is 3. It’s just as important to be able to show systematically that it is in fact 3.

Of course, the ‘style’ of reasoning steps varies a lot with domain. A model determining a potential real estate client’s ability to cover will answer in a manner different from that of one flagging a college history assignment for plagiarism.

Similarly, some domains require more scrutiny — a model determining insurance claims is going to be under far more microscopes.

We can’t account for all the nuances in this issue, but we can certainly present a general framework covering common business interests.

Anyhow, with a task identified, we can move onto the next step of the process.

3. The Cs of Reasoning

With a problem in hand, here’s the framework for whitebox reasoning evaluation. We call it the Cs of Reasoning.

Our reasoning evaluation pipeline for whitebox contexts.

We summarize the steps involved as follows:

Create data: design a dataset that accurately describes the kind of reasoning you expect your model to perform.

enCode patterns: run your model on the designed dataset and numerically encode the activation patterns.

Capture semantics: use interpretability methods to understand what these encoded activation patterns correspond to, e.g. usage of physics terminology or words ending in ‘ed’.

Compare to needs: check how your model performs on the interpreted reasoning elements, and decide whether the factors you care about are being emphasized by the model or not.

Step 2 of the pipeline is what makes the whole setup only suitable for whitebox models — you don’t have any activation patterns to study in a blackbox setting!

4. We Need Data

Let’s start with the first step — creating data. This entails creating a dataset of pairs of input scenarios and reasoned responses. These are the key examples your model should emulate in its behavior.

As an example, consider the following scenario — suppose we have an agent tracking companies for the purpose of deep value investing. If the agent is to be of any use, it must not only construct a diversified company portfolio, but also interpret and assess business documents to identify overlooked opportunities. Such an agent must necessarily justify its picks. To that end, we could use data on past successful investments so that our agent can try mimicking the general idea.

Of course, the elephant in the room is that we have to produce this dataset from scratch. If you’re particularly cheeky, you might think “Why can’t I just synthesize a dataset?” — the answer to that is that synthesizing a dataset by using another language model just shifts the reasoning problem elsewhere entirely!

In other words, you’d have to evaluate the reasoning capabilities of the model generating the synthetic dataset in the first place. Ergo, you’ll have to put in the work to kick things off.

Since there’s no shortage of scenarios requiring intelligent reasoning from models, and each scenario might prioritize certain qualities in answer justifications, we can’t quite define a ‘one size fits all’ metric for the quality of reasoning data. That said, a skeleton we’d suggest is the following:

The CCR Reasoning Matrix

We call it the CCR Reasoning Matrix. It focuses on what we believe are the common qualities outlining reasoning for agentic AI in business contexts.

Completeness — is the answer provided by the model a complete or incomplete response?

Concision — is the answer provided by the model efficiently stated or verbose?

Relevance — is the answer provided by the model composed entirely of relevant facts and ideas?

By checking how your model stacks up against the CCR matrix, you can identify which qualities it’s currently lacking in. You can use this information in the design of your context-aware dataset with varying scores on the matrix.

You can also extend the basic matrix. If you’re in the legal profession and you want an agentic assistant, your best bet is to add ‘citations’ to the matrix. Likewise, different fields will have additional expectations of their models. The matrix is flexible enough to accommodate them!
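
To make the matrix concrete, here is a minimal sketch of how a dataset row and its CCR scores could be stored and summarized. The `ReasoningExample` class, the helper names, and the 0-to-1 scoring scale are all our own assumptions for illustration; the matrix itself doesn’t prescribe a scale or a storage format.

```python
from dataclasses import dataclass, field


@dataclass
class ReasoningExample:
    """One dataset row: an input scenario, a reasoned response, and its scores."""
    scenario: str
    response: str
    # Criterion name -> score in [0, 1]. The scale is an assumption of this sketch.
    scores: dict = field(default_factory=dict)


BASE_CRITERIA = ("completeness", "concision", "relevance")


def mean_scores(examples, criteria):
    """Average each criterion over the examples that were scored on it."""
    means = {}
    for name in criteria:
        values = [ex.scores[name] for ex in examples if name in ex.scores]
        if values:
            means[name] = sum(values) / len(values)
    return means


def weakest_criterion(examples, criteria=BASE_CRITERIA):
    """Name of the criterion with the lowest average score."""
    means = mean_scores(examples, criteria)
    return min(means, key=means.get)


# Hypothetical scores for a legal assistant, with 'citations' added to the matrix.
examples = [
    ReasoningExample("Contract dispute", "...",
                     {"completeness": 1.0, "concision": 0.5,
                      "relevance": 1.0, "citations": 0.0}),
    ReasoningExample("Tenancy question", "...",
                     {"completeness": 0.5, "concision": 0.5,
                      "relevance": 1.0, "citations": 0.5}),
]

print(weakest_criterion(examples))
print(weakest_criterion(examples, BASE_CRITERIA + ("citations",)))
```

Extending the matrix is then just a matter of passing a longer tuple of criteria, which is why we kept the scores in a plain dictionary rather than fixed fields.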

5. Pattern Matching

This next step is more on the technical side. With the dataset in hand, you need to build something called a sparse autoencoder. In a nutshell, it’s an AI model that will stare at the activations of your model and try to make sense of them.

What we’ll do is run our model on the acquired dataset, and train the sparse autoencoder to find an efficient representation of the model’s activation patterns.

A visual example of encoding a neural network activation in 3D space.
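
For the technically inclined, here is a rough sketch of what training a sparse autoencoder on recorded activations can look like. It assumes NumPy is available and uses randomly generated vectors as a stand-in for real model activations. The function names, layer sizes, and hyperparameters are illustrative choices of ours, not a recommended configuration.

```python
import numpy as np


def train_sparse_autoencoder(acts, n_features=8, l1=1e-3, lr=0.05, epochs=300, seed=0):
    """Fit a one-hidden-layer ReLU autoencoder with an L1 penalty on its codes.

    acts: array of shape (n_samples, d_model) holding recorded activations.
    Returns the learned parameters and the loss recorded at every epoch.
    """
    rng = np.random.default_rng(seed)
    n, d = acts.shape
    W_enc = rng.normal(0.0, 0.1, size=(d, n_features))
    b_enc = np.zeros(n_features)
    W_dec = rng.normal(0.0, 0.1, size=(n_features, d))
    b_dec = np.zeros(d)
    losses = []
    for _ in range(epochs):
        # Forward pass: encode to non-negative codes, then reconstruct.
        pre = acts @ W_enc + b_enc
        codes = np.maximum(pre, 0.0)
        err = codes @ W_dec + b_dec - acts
        # Loss = mean squared reconstruction error + L1 sparsity penalty.
        # The codes are non-negative, so their L1 norm is just their sum.
        losses.append((err ** 2).sum(axis=1).mean() + l1 * codes.sum(axis=1).mean())
        # Backward pass, with gradients derived by hand for this small model.
        d_recon = 2.0 * err / n
        d_codes = d_recon @ W_dec.T + l1 / n
        d_pre = d_codes * (pre > 0)
        W_dec -= lr * (codes.T @ d_recon)
        b_dec -= lr * d_recon.sum(axis=0)
        W_enc -= lr * (acts.T @ d_pre)
        b_enc -= lr * d_pre.sum(axis=0)
    params = {"W_enc": W_enc, "b_enc": b_enc, "W_dec": W_dec, "b_dec": b_dec}
    return params, losses


def encode(acts, params):
    """Map activations to sparse, non-negative feature codes."""
    return np.maximum(acts @ params["W_enc"] + params["b_enc"], 0.0)


# Stand-in for real activations: sparse mixtures of 4 hidden directions in 6 dims.
rng = np.random.default_rng(1)
directions = rng.normal(size=(4, 6))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)
mix = rng.uniform(size=(256, 4)) * (rng.uniform(size=(256, 4)) < 0.3)
acts = mix @ directions

params, losses = train_sparse_autoencoder(acts)
codes = encode(acts, params)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

In practice you would swap the synthetic `acts` for activations captured from a layer of your own model while it runs on the dataset from the previous step, and you would likely reach for a deep learning library rather than hand-written gradients.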

With that we can move onto step three — trying to understand the semantics of these activations. By feeding the sparse autoencoder general activation patterns, we can make sense of the madness by trying to gauge what each output feature represents.

Let’s suppose we had an agent grading science reports. One of the features captured by the encoder might refer to Newtonian laws of physics, whilst another may indicate references to electromagnetism.

If we were instead in quantitative finance and trading, one of these features could indicate usage of the Greeks (e.g. vega).

By toying around with the encoder, we can figure out what the ‘building blocks’ of our model’s thought process are and use them to better understand its behavior in business contexts.

A visual explanation of how our pipeline uses model activations.
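
One simple way to gauge what a feature represents is to look at the inputs that activate it most strongly. The sketch below assumes you already have one row of feature codes per text sample. The sample texts and the code values are made up for illustration, and `top_activating_examples` is a hypothetical helper of ours.

```python
def top_activating_examples(texts, codes, feature_index, k=2):
    """Return up to k texts on which the given feature fires most strongly."""
    scored = [(row[feature_index], text) for text, row in zip(texts, codes)]
    # Ignore samples where the feature is silent.
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Made-up science-report snippets and made-up codes for two features.
texts = [
    "Force equals mass times acceleration.",
    "The induced current opposes the change in flux.",
    "An object in motion stays in motion.",
    "The results were plotted in a table.",
]
codes = [
    [0.9, 0.0],
    [0.0, 0.8],
    [0.7, 0.1],
    [0.0, 0.0],
]

for feature_index in range(2):
    print(feature_index, top_activating_examples(texts, codes, feature_index))
```

Reading the top examples side by side, a human (or a second model under supervision) can then propose a label such as ‘Newtonian mechanics’ for feature 0.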

6. Mic Check

The last step of the pipeline is seeing if what we’ve done so far accurately reflects what the model is up to. To this end, we can borrow techniques from AI validation such as ablation and activation patching.

The point of this is to make sure the patterns we encoded earlier do in fact impact our model’s output in all the right ways. We want to ensure they accurately gauge our model on the CCR matrix.
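
As a toy illustration of ablation, the sketch below zeroes out one feature at a time, rebuilds the activation from the remaining features, and measures how much a downstream output changes. Everything here is a simplifying assumption: `decoder` stands in for the autoencoder’s decoder weights, and `readout` stands in for the rest of your model’s forward pass, which in a real setup would be far more than a single multiplication.

```python
def decode(codes, decoder):
    """Rebuild an activation vector as a weighted sum of decoder rows."""
    width = len(decoder[0])
    return [sum(c * row[j] for c, row in zip(codes, decoder)) for j in range(width)]


def ablation_effect(codes, feature_index, decoder, readout):
    """Drop in the downstream output when one feature is zeroed out."""
    baseline = readout(decode(codes, decoder))
    ablated = list(codes)
    ablated[feature_index] = 0.0
    return baseline - readout(decode(ablated, decoder))


# Hypothetical decoder: 3 features, each a direction in a 2-dimensional activation.
decoder = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
codes = [1.0, 3.0, 0.0]


def readout(activation):
    """Stand-in for the rest of the model: only the first dimension matters."""
    return 2.0 * activation[0]


effects = [ablation_effect(codes, i, decoder, readout) for i in range(len(codes))]
print(effects)
```

A feature with a large activation but no effect on the output, like feature 1 here, is a hint that it isn’t doing the work you assumed it was.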

Once that’s done, things are much simpler: borrow some basic statistics to whip up a formula that takes in results from the autoencoder and turns them into a number favoring the factors you’re concerned about.

If we have an agentic AI in real estate, we’d want to prioritize features that indicate things like adjacent development projects and gentrification. On the flip-side, we would want to ignore features of little-to-no relevance like how many times the letter “L” appears in a data sample.
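
One possible shape for such a formula is a weighted share of activation: each feature gets a weight between 0 and 1 reflecting how much you care about it, and the score is the weighted activation divided by the total. This is only one assumption about what the formula could look like. The feature names, activation values, and weights below are invented for the real estate example.

```python
def reasoning_score(feature_activations, weights):
    """Share of total activation that lands on features you care about.

    Features missing from `weights` count as irrelevant (weight 0).
    With weights in [0, 1], the score also falls in [0, 1].
    """
    total = sum(feature_activations.values())
    if total == 0:
        return 0.0
    weighted = sum(weights.get(name, 0.0) * value
                   for name, value in feature_activations.items())
    return weighted / total


# Invented activations for one real estate assessment.
activations = {
    "adjacent_development": 2.0,
    "gentrification": 1.0,
    "letter_l_count": 1.0,
}
weights = {"adjacent_development": 1.0, "gentrification": 1.0}

print(reasoning_score(activations, weights))  # 0.75
```

A score close to 1 means the model’s activity is concentrated on the factors you prioritized, while a low score suggests it’s leaning on features you consider noise.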

7. Another Day

That’s not quite the end of the process — the best of things are those that lend themselves to systematic improvement, after all. Our pipeline is no different — you can continue to make improvements by tuning your autoencoder even further with more refined datasets. You can even add new elements to the CCR matrix without worrying!

You can also set up a system to visualize and assess the extracted features. As your model continues to work, you can use these to double-check the model and get a rough view of how it’s doing under the hood.

Once a system’s in place, improving it isn’t as much of a hassle.

8. A Few Remarks

How much oversight you need depends on how sensitive your business domain is to reasoning errors, but let it be clear that strategies like this aren’t a ‘get out of jail free’ card. Specifically, you’ll need someone keeping an eye on things to make sure nothing’s gone haywire. As with most automation, you ought to view this as a tool and not as a replacement.

That said, our pipeline’s robust enough to accommodate modifications subject to your needs. Go wild!

With that, we’ve quite thoroughly discussed the high-level details of investigating whitebox reasoning capabilities. We’ll see you next time when we get to blackbox settings!